New hardware and software tools are making development cycles faster and more cost-effective.

By: David Rice & Michael Williamson, Critical Link

As featured in the January 2019 issue of Photonics & Imaging Technology magazine.

Machine vision systems have traditionally consisted of stand-alone cameras tethered to PC-based boards or frame grabbers over high-speed interfaces such as Camera Link, CoaXPress and Gigabit Ethernet. Once the image data has been transferred to host computer memory, off-the-shelf software can analyze the captured images to extract information or make a pass/fail decision. While such systems are effective, they generally rely on an expensive, space-consuming host computer to perform much of the image processing.

Figure 1: (a) Critical Link’s MitySOM-A10S is an Intel/Altera Arria 10 SoC board that features a dual-core ARM processor and FPGA fabric, on-board power supplies, two DDR4 RAM memory subsystems, a microSD card, optional eMMC, an on-board RTC, a USB port, and temperature sensors. (b) The MitySOM-A10S development kit provides everything needed to start an embedded development project, and includes external interfaces such as USB, FMC expansion connectors, PCIe expansion headers, and Gigabit Ethernet.

Most commonly, image processing algorithms are implemented using off-the-shelf software packages such as HALCON from MVTec and MATLAB from MathWorks. In recent years, there has been a growing demand to preprocess image data before it is transferred from the camera to the PC. As a result, many of today’s cameras include on-board FPGA fabric that performs functions such as Bayer interpolation, image enhancement, and noise reduction directly in the camera.
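To make one of these in-camera functions concrete, the C sketch below shows the simplest form of Bayer reconstruction, a 2×2 "superpixel" method, assuming an 8-bit sensor with an RGGB mosaic. It is only an illustration with a hypothetical function name: it halves the resolution, whereas the Bayer interpolation described above estimates the two missing colors at every pixel.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical "superpixel" demosaic for an assumed RGGB Bayer layout:
 *   R G
 *   G B
 * Each 2x2 cell of the raw mosaic becomes one RGB pixel, so the output is
 * (width/2) x (height/2) pixels, stored as interleaved R, G, B bytes. */
void demosaic_rggb_superpixel(const uint8_t *raw, size_t width, size_t height,
                              uint8_t *rgb)
{
    assert(raw && rgb && width % 2 == 0 && height % 2 == 0);
    size_t out_w = width / 2;

    for (size_t y = 0; y < height; y += 2) {
        for (size_t x = 0; x < width; x += 2) {
            uint8_t r  = raw[y * width + x];
            uint8_t g1 = raw[y * width + x + 1];
            uint8_t g2 = raw[(y + 1) * width + x];
            uint8_t b  = raw[(y + 1) * width + x + 1];

            uint8_t *px = &rgb[((y / 2) * out_w + x / 2) * 3];
            px[0] = r;
            px[1] = (uint8_t)((g1 + g2 + 1) / 2);  /* rounded mean of the two greens */
            px[2] = b;
        }
    }
}
```

Because every output pixel depends only on one 2×2 cell, this kind of operation streams naturally through an FPGA pipeline with a single line of buffering.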

While adding an FPGA to the camera introduces additional capability, it does not address the never-ending push to reduce the size, weight and power (SWaP) and recurring cost of imaging and machine vision systems, particularly in applications such as portable medical instrumentation, robotics, sensory augmentation systems, and unmanned aerial vehicles. To achieve dramatically lower cost and SWaP, alternatives to PC-based systems must be considered.

ALTERNATIVE APPROACHES

In the past, designers who needed to eliminate the PC could either develop a complete “smart camera” from scratch or pair an off-the-shelf camera with custom-developed embedded processor/FPGA boards to host the image processing software. Both options demand significant schedule time and engineering resources, and can be expensive to produce if volumes are not high.

The advent of the System on Module (SOM) has created a third option: select an off-the-shelf processor board (the SOM) and develop simplified interface boards that connect it to cameras built from off-the-shelf modules or to custom-designed sensor boards. Such SOMs typically contain one or more processors (RISC, DSP, or both), FPGA fabric, RAM, and I/O capability, and support multiple operating systems. Because SOMs are small (2” × 3”, for example), they can fit inside small form factor industrial cameras. An interface board then connects the SOM to external interfaces such as PCI, Gigabit Ethernet, USB3, and CAN bus.

The cost of developing a SOM-based system is significantly lower than that of developing a complete camera/processor system, because the SOM manufacturer has already performed and tested the most complex design and layout work. The interface board, by comparison, requires much less design effort, especially when the designer starts from the development board schematics and design files that some SOM suppliers make available. Furthermore, the SOM supplier manages parts procurement and component obsolescence for the SOM, relieving the camera designer of those maintenance and support responsibilities for the lifespan of the product.

One example of such a SOM is Critical Link’s MitySOM-A10S, an Intel/Altera Arria 10-based board that features up to 480K logic elements (LEs) of FPGA fabric, on-board power supplies, two DDR4 RAM memory subsystems, a microSD card, optional eMMC, an on-board RTC, a USB 2.0 port, and temperature sensors (Figure 1). Its Arria 10 SX device integrates a dual-core ARM Cortex-A9 32-bit RISC processor with NEON SIMD coprocessors, and this multiprocessing unit (MPU) can run bare-metal code, embedded Linux, or a range of commercial operating systems.

PARALLEL DEVELOPMENT

It is common for SOM suppliers to offer development kits that include a full-featured base board with multiple interfaces such as USB2, USB3, RS-232, FMC expansion connectors, PCIe expansion headers, Gigabit Ethernet, HDMI, and CAN bus. Some suppliers have started offering development kits specifically for embedded imaging projects that feature popular machine vision sensors. Whichever kit is selected, an off-the-shelf development kit lets software and hardware development proceed in parallel, which can drastically shorten time to market.

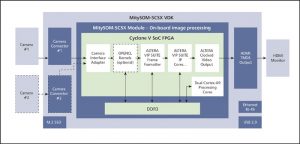

Figure 2: The embedded vision development kit uses a MitySOM-5CSx Module with an interface board. The development kit enables rapid prototyping and provides an open architecture for developers to embed off-the-shelf IP or their own proprietary image processing algorithms.

Development kits give hardware designers the option to start from manufacturer-provided schematics and design files and to validate their designs before committing to a custom interface board. While hardware engineers develop the necessary interfaces, software engineers can choose from numerous commercial and open source image processing libraries to develop the final product. One such library is Intel’s Video and Image Processing (VIP) Suite, a library of FPGA intellectual property (IP) that includes functions such as color space conversion, up/down image scaling, frame rate conversion, image convolution, video I/O formatting, and thresholding.
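Color space conversion, the first VIP function listed, is a good example of the fixed-point arithmetic such FPGA IP performs. The sketch below is not Intel’s implementation; it is a hypothetical C model of a full-range BT.601 RGB-to-YCbCr conversion with coefficients scaled by 256, the kind of bit-accurate reference a software engineer might write to check FPGA output against.

```c
#include <assert.h>
#include <stdint.h>

/* Saturate an intermediate result to the 0..255 range of an 8-bit channel. */
static uint8_t clamp_u8(int v)
{
    return (uint8_t)(v < 0 ? 0 : (v > 255 ? 255 : v));
}

/* Hypothetical bit-accurate model: full-range BT.601 RGB -> YCbCr with
 * coefficients scaled by 256 (8 fractional bits), e.g. 0.299 -> 77.
 * Only integer multiply, add, and shift are used, which map naturally
 * onto FPGA DSP blocks. */
void rgb_to_ycbcr_q8(uint8_t r, uint8_t g, uint8_t b,
                     uint8_t *y, uint8_t *cb, uint8_t *cr)
{
    assert(y && cb && cr);
    /* +128 rounds to nearest; +32768 (128 << 8) is the chroma offset, added
     * before the shift so the value being shifted is never negative. */
    *y  = clamp_u8(( 77 * r + 150 * g +  29 * b + 128) >> 8);
    *cb = clamp_u8((-43 * r -  85 * g + 128 * b + 32768 + 128) >> 8);
    *cr = clamp_u8((128 * r - 107 * g -  21 * b + 32768 + 128) >> 8);
}
```

The clamp matters for saturated colors: pure red, for example, would otherwise produce a Cr value of 256.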

Other off-the-shelf packages include MathWorks’ Image Processing Toolbox, which provides algorithms for image segmentation, enhancement, noise reduction, geometric transformations, image registration, and 3D image processing, and HALCON from MVTec, which offers FPGA RTL export of the software’s image processing libraries.

PROGRAMMING FPGAS

Although using an FPGA for image processing can seem daunting without the right experience, new tools make such development easier. To program the numerous logic elements, registers, memory blocks, and multiplier/accumulators that make up the FPGA fabric of these devices, developers can use software such as Intel’s Quartus Prime. The tool analyzes and synthesizes designs written in hardware description languages (HDLs) such as VHDL and Verilog, which describe the structure and behavior of digital logic circuits.

Once the design has been written in an HDL, it can be compiled and timing-analyzed, and the on-board processors and FPGA can then be configured to perform the required function. Quartus Prime, for example, supports both VHDL and Verilog for hardware description, as well as visual editing of logic circuits.

A number of development tools can speed up the programming of these devices for image processing and machine vision applications. One approach is to use Open Computing Language (OpenCL), a framework for writing programs that execute across CPUs, GPUs, DSPs and FPGAs. With this method, a programmer writes code in C/C++, then tests and validates it on a PC. Once verified, the processing kernels can be recompiled to register-transfer level (RTL) and implemented in the FPGA logic.
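The OpenCL workflow depends on expressing the per-pixel work as a kernel that handles one index at a time. As a rough, hypothetical illustration, the plain-C sketch below writes a brightness-gain "kernel" that way and runs it over every index in a host loop, which is how such logic might be validated on a PC. In real OpenCL C the index would come from `get_global_id(0)` and the host would enqueue the kernel (for example with `clEnqueueNDRangeKernel`) instead of looping; the function names here are assumptions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Kernel-style body (hypothetical): handles exactly one pixel, selected by a
 * global index. In OpenCL C, gid would come from get_global_id(0) rather
 * than a parameter. gain_q8 is the gain scaled by 256 (e.g. 384 = 1.5x). */
static void gain_kernel(size_t gid, const uint8_t *in, uint8_t *out,
                        unsigned gain_q8)
{
    unsigned v = (in[gid] * gain_q8 + 128u) >> 8;  /* rounded fixed-point gain */
    out[gid] = (uint8_t)(v > 255u ? 255u : v);     /* saturate to 8 bits */
}

/* Host-side stand-in for an NDRange of n work-items: runs each work-item in
 * turn so the kernel logic can be checked on a PC before FPGA compilation. */
void run_gain_on_host(const uint8_t *in, uint8_t *out, size_t n,
                      unsigned gain_q8)
{
    assert(in && out);
    for (size_t gid = 0; gid < n; ++gid)
        gain_kernel(gid, in, out, gain_q8);
}
```

Keeping the kernel free of cross-pixel dependencies is what allows an FPGA compiler to replicate or pipeline it without changing its results.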

A similar approach involves the Open Source Computer Vision Library (OpenCV), a C++-based open source computer vision and machine learning software library. OpenCV includes more than 2,500 optimized algorithms that can be used to detect and recognize faces, identify objects, classify human actions in videos, track camera movements, track moving objects, extract 3D models of objects, and produce 3D point clouds from stereo cameras.

A more advanced approach, however, involves high-level synthesis (HLS), also known as C synthesis. With this method, code written in C/C++ or MATLAB is analyzed and translated into a hardware description language (such as VHDL), which is in turn synthesized to the gate level by a logic synthesis tool.
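HLS tools generally favor a restricted style of C/C++: fixed loop bounds, statically sized arrays, and no dynamic memory allocation or recursion. The sketch below, a 1-2-1 horizontal smoothing filter over a line of hypothetical fixed width, is written in that style, with a three-pixel shift register that would map to registers in hardware. Vendor-specific pragmas for pipelining and interfaces are omitted, so treat it as an illustration of the coding style rather than input ready for a particular tool.

```c
#include <assert.h>
#include <stdint.h>

#define LINE_WIDTH 640  /* hypothetical fixed line width; HLS needs static bounds */

/* 1-2-1 horizontal smoothing in an HLS-friendly style: fixed trip count,
 * static arrays, no malloc or recursion. Edge pixels are replicated, and the
 * output lags the input by one pixel, as it would in a hardware pipeline. */
void smooth_line_121(const uint8_t in[LINE_WIDTH], uint8_t out[LINE_WIDTH])
{
    assert(in && out);
    uint8_t win[3] = { in[0], in[0], in[0] };  /* shift register (window) */

    for (int x = 0; x < LINE_WIDTH + 1; ++x) {
        /* Shift in the next pixel, replicating the last pixel past the end. */
        win[0] = win[1];
        win[1] = win[2];
        win[2] = in[x < LINE_WIDTH ? x : LINE_WIDTH - 1];

        /* Once the window is centred on pixel x-1, emit its filtered value
         * ((a + 2b + c) / 4, rounded). */
        if (x >= 1)
            out[x - 1] = (uint8_t)((win[0] + 2 * win[1] + win[2] + 2) >> 2);
    }
}
```

The same function can be compiled and tested natively on a PC, which is part of why HLS shortens the iteration loop.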

Both OpenCL and HLS usually reduce implementation time substantially, because the code is easier to write and, more importantly, easier to iterate on and modify.

EMBEDDED IMAGING DESIGN

While FPGAs perform pipelined functions very efficiently and quickly, FPGA-only designs have limitations. Functions such as high/low-pass filtering, thresholding, and Bayer transformations can be partitioned to the FPGA. However, functions that must analyze the complete image iteratively may be better suited to an embedded CPU or GPU.
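Thresholding illustrates the distinction. A fixed threshold is a pure per-pixel operation that streams through an FPGA pipeline without buffering the frame. An adaptive method such as Otsu’s (an illustrative choice here) needs the histogram of the entire image before it can produce its first output pixel, and then searches all 256 gray levels using floating-point arithmetic, the kind of whole-image work that tends to suit a CPU. A minimal C sketch, assuming 8-bit grayscale input:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* FPGA-friendly: a fixed threshold needs only the current pixel. */
static inline uint8_t threshold_px(uint8_t v, uint8_t t)
{
    return v > t ? 255 : 0;
}

/* CPU-friendly: Otsu's method chooses t from the histogram of the whole
 * frame, maximizing the between-class variance of background (<= t) and
 * foreground (> t). No pixel can be classified until all n have been seen. */
uint8_t otsu_threshold(const uint8_t *img, size_t n)
{
    assert(img && n > 0);
    size_t hist[256] = {0};
    for (size_t i = 0; i < n; ++i)
        hist[img[i]]++;

    double sum_all = 0.0;
    for (int t = 0; t < 256; ++t)
        sum_all += (double)t * hist[t];

    double sum_bg = 0.0, best_var = -1.0;
    size_t w_bg = 0;
    uint8_t best_t = 0;
    for (int t = 0; t < 256; ++t) {
        w_bg += hist[t];               /* pixels at or below t */
        if (w_bg == 0) continue;
        size_t w_fg = n - w_bg;        /* pixels above t */
        if (w_fg == 0) break;
        sum_bg += (double)t * hist[t];
        double mean_bg = sum_bg / w_bg;
        double mean_fg = (sum_all - sum_bg) / w_fg;
        double diff = mean_bg - mean_fg;
        double between = (double)w_bg * (double)w_fg * diff * diff;
        if (between > best_var) {
            best_var = between;
            best_t = (uint8_t)t;
        }
    }
    return best_t;
}
```

A common partition is therefore to let the CPU compute the threshold from one frame and hand it to a streaming `threshold_px`-style stage in the FPGA for the next.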

As an example, the Dart board-level camera series from Basler features an FPGA on the sensor board that performs fixed image pre-processing. Beyond that, advanced image processing and analytics must be performed outside the camera’s FPGA. This is done using a SOM, which is included in the Dart’s embedded vision development kit (VDK) (Figure 2).

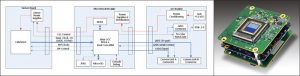

Figure 3: Fixed pre-processing functions provided by the sensor board’s FPGA include noise reduction, white balancing, and de-Bayering. The edge detection algorithm shown here (left) runs on board a SOM without the support of a PC.

The VDK consists of the Basler Dart camera module; an LVDS cable connecting it to an interface board with HDMI, Ethernet, USB 2.0, and GPIO outputs; and a SOM. Image preprocessing functions such as Bayer conversion, bad pixel correction and noise reduction take place on board the camera module, leaving developers free to use the SOM’s on-board ARM processor and FPGA fabric for their own image processing functions (Figure 3).
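The edge detection algorithm in Figure 3 is not specified. As an assumed example, the sketch below computes a 3×3 Sobel gradient magnitude on an 8-bit grayscale frame in C, using the common |Gx| + |Gy| approximation and zeroing the one-pixel border. Code like this could run first on the SOM’s ARM cores and later be moved into the FPGA fabric; the function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* 3x3 Sobel edge magnitude (|Gx| + |Gy| approximation), clamped to 8 bits.
 * Border pixels, which lack a full 3x3 neighbourhood, are set to 0. */
void sobel_u8(const uint8_t *in, uint8_t *out, size_t w, size_t h)
{
    assert(in && out && w >= 3 && h >= 3);
    for (size_t y = 0; y < h; ++y) {
        for (size_t x = 0; x < w; ++x) {
            if (y == 0 || x == 0 || y == h - 1 || x == w - 1) {
                out[y * w + x] = 0;
                continue;
            }
            /* p points at the top-left pixel of the 3x3 window. */
            const uint8_t *p = &in[(y - 1) * w + (x - 1)];
            int a = p[0],     b  = p[1],         c = p[2];
            int d = p[w],                        f = p[w + 2];
            int g = p[2 * w], hh = p[2 * w + 1], i = p[2 * w + 2];

            int gx = (c + 2 * f + i) - (a + 2 * d + g);   /* horizontal gradient */
            int gy = (g + 2 * hh + i) - (a + 2 * b + c);  /* vertical gradient */
            int mag = abs(gx) + abs(gy);
            out[y * w + x] = (uint8_t)(mag > 255 ? 255 : mag);
        }
    }
}
```

Like the smoothing filter, Sobel needs only a 3×3 window, so an FPGA version would typically keep two line buffers rather than the full frame.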

This modular concept of sensor board, processor board, and interface board is taken a step further in Critical Link’s MityCAM architecture. Figure 4 shows a block diagram of a three-board system in which each board can be interchanged, to a degree, depending on the requirements of the system. CMOS and CCD sensor boards are available with an array of image sensors from top manufacturers, and new sensors can be integrated quickly and cost-effectively.

Figure 4: (a) Block diagram of Critical Link’s MityCAM architecture, a three-board system that features an array of CMOS and CCD sensor options, two processor boards with ARM and FPGA fabric open for user programming, and interchangeable interface boards with options for Gigabit Ethernet, USB3, USB2, Camera Link, CoaXPress, and HDMI. (b) An example of a three-board camera system in its stacked configuration.

The central processing board is available in two SOM-based options, both of which feature ARM and FPGA fabric in an open architecture for developers to embed their selected image processing functions. Interface boards can be selected with dual Camera Link, USB3, Gigabit Ethernet, HDMI, USB2, and CoaXPress. Regardless of the individual boards selected, the system can be arranged in a stack that uses strong board-to-board connectors, and is available in production volumes with or without mechanical packaging.
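One way software can take advantage of this interchangeability is to hide each board behind a small table of function pointers, so the processing code on the SOM does not change when a different sensor or interface board is fitted. The types and functions below are hypothetical and are not part of any Critical Link API; the mock boards stand in for hardware, in the spirit of the parallel development described earlier.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical board abstractions: each fitted board supplies its own ops. */
typedef struct {
    const char *name;
    size_t width, height;
    int (*read_frame)(uint8_t *dst);                   /* 0 on success */
} sensor_board;

typedef struct {
    const char *name;
    int (*send_frame)(const uint8_t *src, size_t len); /* 0 on success */
} interface_board;

/* Board-independent SOM code: capture, process in place, transmit. */
int run_pipeline_once(const sensor_board *s, const interface_board *io,
                      uint8_t *frame, void (*process)(uint8_t *, size_t))
{
    assert(s && io && frame && process);
    size_t len = s->width * s->height;
    if (s->read_frame(frame) != 0)
        return -1;
    process(frame, len);
    return io->send_frame(frame, len);
}

/* Hypothetical mock boards for PC-side testing before hardware exists. */
static int mock_read(uint8_t *dst)   /* fills a fixed 2x2 test pattern */
{
    for (size_t i = 0; i < 4; ++i)
        dst[i] = (uint8_t)(i * 10);
    return 0;
}

static size_t mock_sent_len;
static int mock_send(const uint8_t *src, size_t len)
{
    (void)src;
    mock_sent_len = len;             /* record what would have been sent */
    return 0;
}

/* Example processing stage: invert 8-bit pixels in place. */
static void invert(uint8_t *px, size_t n)
{
    for (size_t i = 0; i < n; ++i)
        px[i] = (uint8_t)(255 - px[i]);
}
```

Swapping a USB3 interface board for a Camera Link one would then mean supplying a different `interface_board` table, while `run_pipeline_once` and the processing stage stay untouched.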

While programming embedded imaging and machine vision systems may initially appear complex, the growing number of hardware and software development tools now available is making these development cycles faster and more cost-effective. In applications such as portable instrumentation, autonomous systems, and process automation equipment, embedded systems will reduce size, weight and power, as well as the upfront investment of both schedule and engineering resources.