A successful transition to an embedded platform requires that system developers define and manage both short- and long-term goals.

By: David Rice & Amber Thousand, Critical Link

As featured in the July 2019 issue of Photonics Spectra magazine.

Embedded vision is a hot topic in many industrial spaces. The ability to process data on board a camera, without a back-end PC, server, or other costly system, has system developers in industries from factory test and measurement to agriculture, and from autonomous driving to medical diagnostics, looking for ways to move processing closer to the sensor. Doing so can lead to lower recurring costs, faster performance, an optimized footprint (that is, low size, weight, power, and cost, or low SWaP-C), and systems that are more agile and able to evolve with changing requirements. This means innovation and growth in industrial markets across the world will be heavily affected by advancements in embedded vision.

Cities, factories, and transportation systems are implementing vision-based technologies to improve efficiency, performance, cost, reliability, and safety.

Moving from a traditional PC- or server-supported vision system to an embedded platform does not happen overnight, and it requires an investment in upfront engineering and development. There are several key factors developers should consider early on when planning to transition from a traditional vision system to a new embedded vision design. Evaluating these factors ahead of time will help ensure a product that meets current system performance requirements, while allowing for application growth and long-term design stability.

Processing technology

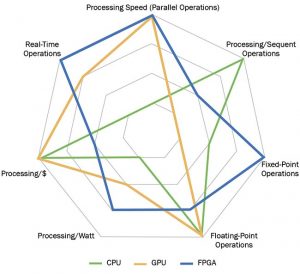

One of the first questions developers may ask is on which processing technology they should base their design. The answer is becoming increasingly complex because processing performance and speed have escalated so rapidly that developers are able to acquire and process images faster, even when implementing higher-resolution sensors (Figure 1).

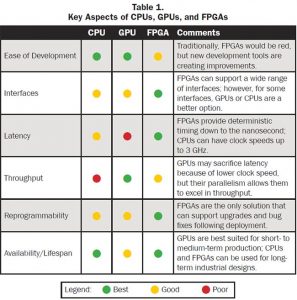

CPUs (central processing units), GPUs (graphics processing units), and FPGAs (field-programmable gate arrays) each offer distinct advantages for embedded vision systems. Furthermore, the growing number of chip and board manufacturers in the marketplace makes the decision not only about the processing technology, but also about the supply chain, quality, support, and longevity of the product being developed.

CPUs

CPUs are easy to program, and they are versatile in that they can perform a wide range of functions. Typically, they also include a host of analog and digital peripherals. CPUs perform processing operations in sequence. For vision applications, one operation must run on an entire image before the next operation can begin. Multicore CPUs allow for some level of parallel operation (albeit far less than GPUs or FPGAs), increasing performance without sacrificing ease of programming.
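The sequential model described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the tiny list-of-rows image and the `threshold`, `invert`, and `run_sequential` names are hypothetical, not part of any vendor toolchain. The point is that each stage produces a complete intermediate image before the next stage starts.

```python
# Illustrative only: a tiny grayscale "image" stored as a list of rows.
image = [
    [10, 200, 30],
    [250, 40, 180],
]

def threshold(img, level=128):
    """Stage 1: binarize every pixel of the whole image."""
    return [[255 if p >= level else 0 for p in row] for row in img]

def invert(img):
    """Stage 2: invert every pixel of the whole image."""
    return [[255 - p for p in row] for row in img]

def run_sequential(img, stages):
    """Apply each stage to the complete image before starting the next."""
    for stage in stages:
        img = stage(img)  # a full intermediate image exists at this point
    return img

result = run_sequential(image, [threshold, invert])
print(result)
```

On a real system the stages would be optimized library calls rather than list comprehensions, but the ordering constraint is the same.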

Given their sequential nature, CPUs remain the best architecture for applications in which algorithms such as pattern matching or optical-character recognition will be used. This type of processing analyzes the entire image and compares it to a master data set. CPUs are most efficient here because of their extremely fast clock speeds and direct access to typically large amounts of memory.

GPUs

GPUs are similar to multicore CPUs in that they are made up of a series of logic cores, each capable of performing operations sequentially. GPUs, however, are composed of many more, smaller cores. So although multicore CPUs, most often with two to four cores, can perform a small amount of parallel processing, GPUs can handle orders of magnitude more, with hundreds of logic cores or more.

GPUs have grown in popularity in the embedded vision space, in part because they are well suited for applications where simple operations can be performed in parallel and continuously on large data sets. As an example, GPUs offer a tremendous benefit in artificial intelligence (AI) training applications, where the ability to process massive data sets in relatively short amounts of time is essential. When it comes to AI inferencing, however, FPGAs tend to have the best performance, followed by GPUs.
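The pattern that suits GPUs, one simple operation applied independently across a large data set, can be sketched as follows. This is not GPU code: it uses Python's standard `concurrent.futures` thread pool purely to show the structure, and Python threads will not deliver a real speedup for this kind of work. The `brighten_row` and `brighten_parallel` names and the pixel values are assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

def brighten_row(row, offset=50):
    """Apply one simple, independent operation to every pixel in a row."""
    return [min(255, p + offset) for p in row]

def brighten_parallel(img, workers=4):
    """Split the image by rows and hand the rows to a pool of workers."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves row order even though rows are processed concurrently
        return list(pool.map(brighten_row, img))

image = [[0, 100, 250], [10, 210, 30]]
bright = brighten_parallel(image)
print(bright)
```

Because no row depends on any other, the work divides cleanly across however many cores are available, which is the property a GPU exploits at much larger scale.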

GPUs excel at functions such as video post-processing, and they offer optimized efficiency for floating-point computations and matrix math. The trade-off is that they consume large amounts of power, which can pose a challenge in embedded vision systems where portability or low SWaP-C matters.

GPUs tend to be best for consumer designs or products that will go through frequent design upgrades, rather than for industrial, medical, or other long-lifespan products. This is because although the performance lifespan of a GPU can easily extend to a decade, most GPU devices remain on the market for only 18 to 36 months, after which they are considered obsolete and are replaced by next-generation devices.
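A rough calculation shows why this matters for long-lifespan products. The sketch below assumes a hypothetical ten-year production life and assumes no stockpiling of parts through last-time buys; only the 18- to 36-month availability window comes from the discussion above.

```python
import math

def device_generations(product_months, availability_months):
    """Number of successive devices needed to cover the product's life."""
    return math.ceil(product_months / availability_months)

product_life = 10 * 12  # hypothetical ten-year production life, in months

best_case = device_generations(product_life, 36)   # longest availability window
worst_case = device_generations(product_life, 18)  # shortest availability window

print(best_case, worst_case)
```

Under these assumptions, the design would have to move to a new device somewhere between three and six times over its life, each move carrying its own requalification effort.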

FPGAs

At the highest levels of processing, FPGAs provide the greatest advantages in speed and flexibility. Given their highly efficient parallel-processing capability, FPGAs are more suitable than CPUs or GPUs for real-time processing and multiple-interface applications. They are reprogrammable, with flexibility for design changes and new functionality that cannot be achieved with any other architecture (Figure 2).

Figure 2. A performance comparison between a CPU, GPU, and an FPGA, noting key image-processing characteristics.

In terms of energy efficiency — an important consideration in embedded systems design — FPGAs are superior to both CPUs and GPUs. This is especially true for performing algorithms based on logic and fixed-precision computations. The two biggest names in FPGAs have supported device families for a decade or more. Going forward, this means developers of industrial, medical, and other long-lifespan applications can depend on device availability and on access to product support for the life of a device’s design.
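To make "fixed-precision computation" concrete, here is a minimal sketch of applying a gain to 8-bit pixels using only integer operations, the kind of arithmetic that maps naturally onto FPGA logic. The Q8.8 format (8 fractional bits) and the function names are assumptions chosen for illustration, not a description of any particular device or IP block.

```python
FRAC_BITS = 8  # Q8.8 format: 8 fractional bits (an assumption for this sketch)

def to_fixed(x):
    """Convert a float to a Q8.8 integer, rounding to nearest."""
    return int(round(x * (1 << FRAC_BITS)))

def apply_gain_fixed(pixel, gain_fixed):
    """Multiply an 8-bit pixel by a Q8.8 gain using only integer operations."""
    # Add half an LSB before shifting so the result is rounded, not truncated
    scaled = (pixel * gain_fixed + (1 << (FRAC_BITS - 1))) >> FRAC_BITS
    return min(255, scaled)  # saturate to the 8-bit range

gain = to_fixed(1.5)
out = [apply_gain_fixed(p, gain) for p in (0, 100, 200)]
print(gain, out)
```

A multiply, an add, and a shift replace the floating-point multiply, which is one reason this style of algorithm can be implemented with less logic and less power.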

Traditionally, FPGAs have been the most difficult to program, requiring developers to have special skills in hardware description languages (HDLs), such as VHDL or Verilog. Designs have also required long compile cycles. However, many tools are available today to make programming an FPGA faster and easier, even without expertise in HDLs. Two examples are OpenCL and high-level synthesis (HLS), which allow C/C++ code to target the FPGA. Learning to use these tools can take time; fortunately, many manufacturers offer software development kits that make it easier to get started. Developers can then use such tools to gain other benefits as well, including the ability to accelerate application code, reduce power usage, and drastically reduce compile times.

For an even easier way to program FPGAs, graphical programming tool kits now enable image-processing functions to be implemented using visual-programming pipelines. A growing number of suppliers are launching tools in which image-processing functions — from pixel correction and color processing to trigger functionality and segmentation — can be graphically pipelined and then implemented into the FPGA. These are not yet universal solutions, but expect to see rapid expansion in this area in years to come.

Alternatively, FPGAs allow for off-the-shelf intellectual property (IP) blocks to be integrated into the fabric, bypassing lengthy development cycles and giving streamlined functionality. Many embedded board and camera vendors provide IP blocks already embedded in their solutions for image preprocessing functions such as compression, white balancing, debayering, and noise reduction. Beyond these basics, IP blocks are available from a range of vendors for application-specific processing tasks including object measurement and tracking, stereo vision, motion detection, and many others. IP blocks can also be used to implement a variety of interfaces.

Heterogeneous architecture

In many cases, developers will include more than one processing technology in their design, most often combining an FPGA with either a CPU or GPU. This means the two devices share the processing load, each one taking on the tasks it is best suited to handle.

For example, in a heterogeneous system-on-chip (SoC) architecture where a CPU and FPGA are both present, developers can optimize their design for the strengths of each device. Depending on the interface, the CPU may be best for image acquisition, and then the developer may transfer the image to the FPGA for preprocessing tasks such as noise reduction or debayering. Images can then be processed in parallel in the FPGA or sent back to the CPU for iterative functions. Even with these transfers, such a pipeline can be more efficient than a single-processing architecture.
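A simple cost model illustrates why the transfers can still pay off. Every number below is hypothetical (milliseconds per frame, chosen only to show the arithmetic), as are the stage names and the assumption of exactly one transfer to the FPGA and one back.

```python
# All timings are hypothetical, in milliseconds per frame.
CPU_ONLY = {"acquire": 2.0, "debayer": 12.0, "denoise": 15.0, "match": 6.0}
FPGA_TIME = {"debayer": 1.0, "denoise": 1.5}  # stages offloaded to the FPGA
TRANSFER = 1.0  # assumed cost of each CPU<->FPGA image transfer

def cpu_only_latency(stages):
    """Total time when every stage runs on the CPU."""
    return sum(stages.values())

def heterogeneous_latency(stages, offloaded, transfer):
    """Total time when some stages run on the FPGA instead of the CPU."""
    cpu_part = sum(t for name, t in stages.items() if name not in offloaded)
    fpga_part = sum(offloaded.values())
    return cpu_part + fpga_part + 2 * transfer  # one transfer out, one back

single = cpu_only_latency(CPU_ONLY)
shared = heterogeneous_latency(CPU_ONLY, FPGA_TIME, TRANSFER)
print(single, shared)
```

With these made-up figures, the shared pipeline finishes a frame in well under half the CPU-only time despite the added transfers. The balance shifts if transfers are slow or the offloaded stages are cheap to begin with, which is why the split deserves measurement on real hardware.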

There is no universally correct answer to the complex question of which processing architecture to choose. Choosing the right technology is a function of multiple factors and is highly dependent on not only the technology but the support system around it.

Design support

Executing a new embedded vision product design typically requires factory support for issues such as sensor integration, software and image processing, interface development, or all of the above. Questions come up throughout the design process that can be answered by an array of sources: data sheets, community forums, application engineering support, and direct factory interaction. Depending on a company’s size and reach, some resources may be more accessible than others. It’s important to consider how support will be delivered for the products you’ve selected (Table 1).

If the in-house team is knowledgeable and has extensive experience in imaging design, access to thorough documentation or community forums may be enough. Previous experience with a sensor, interface spec, and processing technology can mean major efforts will be focused on the application software and processing functions — the IP — that your team is developing.

If the technologies being integrated into the design are not familiar to the team, however, it’s important to understand whether access to application engineering support will be available from the manufacturer or its channel partners. As the pressure to operate more efficiently has grown for major manufacturers, many have pulled back on direct support to customers. In some cases, the manufacturers’ distribution partners have stepped in to provide design support through their local branches.

Alternatively, off-the-shelf components can be selected or an outside engineering services provider can be engaged to help with the design. Either option can streamline the development schedule and allow the team to focus on generating value-added IP. Working with an engineering services provider that is familiar with the technologies and brands selected means many design challenges can be cleared faster, sometimes by leveraging existing building blocks. Or, if the upfront investment in cost or schedule is not feasible, off-the-shelf development kits can provide a platform for low-cost, rapid prototyping.

Successful transitions

A growing shortage of hardware engineers means fewer companies have the manpower to develop new production-suitable hardware from the ground up. Open-source platforms that help people learn programming have offered relief, and for many applications, these solutions can be viable for production. However, when it comes to industrial or medical-grade systems, a transition path is needed to ensure long-term production and design stability. The question then becomes: What can be done early on to ensure the prototype is suitable for production, or to ensure that a viable transition plan exists?

This question was partly addressed in the discussion of processing technologies above, showing that certain architectures are designed for longer availability than others. The same is true for image sensors and cameras, as well as for all the other components designed into a new product. When selecting any of these components for a final design, it is very important for the system designer to be aware of availability projections. Working with an off-the-shelf board can often alleviate problems of availability, since the board supplier manages the supply chain and deals with obsolescence issues. Developers should look for a reputable supplier that publishes product change notifications (PCNs), that does not change part numbers frequently, and that will provide patches and engineering support in the event that a change is necessary. These are healthy signs that the supplier maintains its board designs over time.

Low-volume or short-term production runs, and designs that will go through frequent changes, will probably not be affected by the above factors. However, if the product is intended for medium- to high-volume production or long-term availability, the design team should factor this in up front when evaluating which processing platform to use.

Flexibility for the future

Finally, developers should know how much flexibility or additional headroom is needed to accommodate future application changes, feature growth, or advanced processing. Some designs remain relatively fixed for the life of the product, with little need to integrate new features or capabilities. Others, however, are used in industries that are constantly evolving (Figure 3). The inability to implement new processing techniques or application software upgrades could hurt sales and cripple return on investment. This is true in areas such as manufacturing and warehousing, smart cities and infrastructure, energy and utilities, and transportation. The wave of embedded vision integration is still far from cresting in these areas, and developers who allow room for their design to grow as technology advances will be better positioned for long-term success.

Figure 3. Embedded imaging systems have already made their way into medical and scientific applications, and they are gaining even more momentum as the health care industry drives toward higher standards and leaner processes.

Meet the authors

Dave Rice is co-founder of Critical Link and vice president of its engineering division. He has led the company’s technology development efforts for more than 20 years. Rice has a bachelor’s degree in electrical engineering from Penn State University; email: david.rice@criticallink.com.

Amber Thousand is the director of marketing at Critical Link, working with engineering on product development. She has a bachelor’s degree from Elon University and a master’s in business administration from the University of Phoenix; email: athousand@criticallink.com.