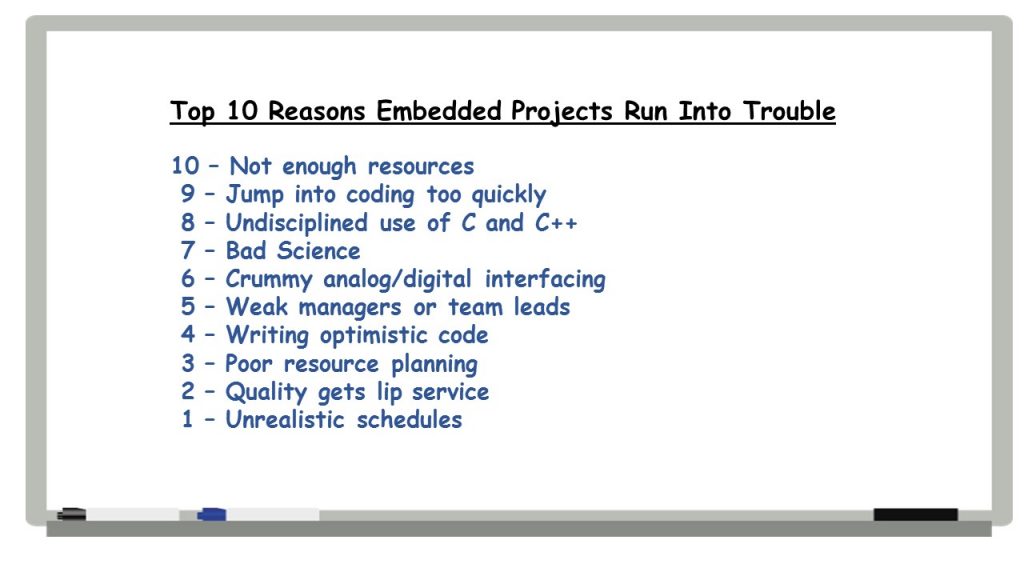

For the last couple of months, my bi-weekly posts have been devoted to Jack Ganssle’s excellent series on the top reasons why embedded projects run into trouble that’s been running on embedded.com. As an engineer who’s been involved in building embedded applications throughout my career, I found myself nodding my head in agreement each time I read one of Jack’s articles. From his number 10 reason – not enough resources – down through his number 1 – unrealistic schedules – I’ve recognized a number of situations that I’ve personally been involved in. But it’s also been gratifying to recognize that, here at Critical Link, we put a good deal of focus on avoiding problems like the ones Jack has on his list.

With that, here’s my summary of Jack’s Top Two.

Some of Jack’s Top Ten – maybe even all of them – have issues around quality at their core. Here he addresses it directly.

Jack points out that American cars used to have a terrible reputation for quality. Then the Japanese began entering the market with cars that were both higher in quality and lower in price than American vehicles. Then American car manufacturers paid a few visits to Japan and discovered what the Japanese had learned from American quality gurus like W. Edwards Deming: “that the pursuit of quality always leads to lower prices.” These days, quality is no longer a problem for American car makers:

Today you can go to pretty much any manufacturing facility and see the walls covered with graphs and statistics. Quality is the goal, and it is demanded, measured, and practiced.

Alas, Jack finds that, in firmware labs, there tends to be less of a focus on quality. He wants developers to spend more time on test, and not let it wait until the end of a project. Because we all know that, if schedules get tight, something’s got to give and if testing is on the tail end of the schedule, well…

But test is not enough. Quality code happens when we view development as a series of filters designed to, first, not inject errors, and second, to find them when they do appear.

We’re not a firmware lab, per se, but the point about testing and best efforts to “not inject errors” is well taken.

I’m very proud that, at Critical Link, we take quality very seriously across the board. (Sorry for the pun. I couldn’t resist.) I’d be happy to talk to anyone about our quality focus, but to take a shortcut, I’ll note that we’re ISO 9001:2015 certified.

Jack’s #1 in this series of articles is one he characterizes as “a fail that is so common it could be called The Universal Law of Disaster:” unrealistic schedules. In his discussion of this problem, he catalogs the reasons why those impossible schedules are something that we universally (and perpetually) have to grapple with as developers. He talks about top-down pressures to hit aggressive dates in order to secure a project.

These pressures aside, he doesn’t let the engineers doing the estimating off the hook:

Too often we do a poor job coming up with an estimate. Estimation is difficult and time consuming. We’re not taught how to do it… so our approach is haphazard. Eliciting requirements is hard and boring so gets only token efforts.

Jumping into coding too quickly is #9 in Jack’s top 10. As I noted in my commentary on that reason, “at Critical Link, our experience as systems engineers means we place tremendous importance on planning and being careful about our requirements.” I’ve found that, if you put the time in on the front end to very thoughtfully figure out what the exact requirements are, you’re rewarded with a much more realistic schedule.

There are, of course, invariably changes to a project. That’s just the nature of the development beast.

There will always be scope modifications. The worst thing an engineer can say when confronted with a new feature request is “sure, I can do it.” The second worst thing is “no.”

“Sure, I can do it” means there will be a schedule impact, and without working with the boss to understand and manage that impact we’re doing the company a disservice. The change might be vitally important. But managing it is also crucial.

“No” ensures the product won’t meet customer needs or expectations. At the outset of a project no one knows every aspect of what the system will have to do.

By the way, the answer to allowing some requirements creep without impacting the schedule is NOT to “throw more resources at it,” which Jack sees as “usually an exercise in futility.” Best to a) do solid planning and careful scheduling from the get-go, and b) keep everyone who needs to be in the loop when the schedule looks like it might slip.

Anyway, that’s it for the Top Ten Reasons Embedded Projects Run into Trouble. Good things to keep in mind, even for us old-timers! Hope that you enjoyed reading this series of post as much as I enjoyed writing them. I think the list is pretty complete, but if you have other problem areas you think should have made the list, please let me know. I’d love to hear from you.

Here are the links to my prior posts summarizing Jack’s Reasons 10 and 9, Reasons 7 and 8, and Reasons 6 and 5, and Reasons 4 and 3.