As a long-term partner of Texas Instruments – we’ve been a Platinum Partner of the TI Design Network since 2008 – those of us at Critical Link are always interested to see what they’re up to. And because I’m a car aficionado, the recent news about their work in the automotive world had special resonance for me. What they’re up to is improvements to radar.

Those who follow automotive technology news are, of course, aware of the problems associated with automotive radar systems:

Traditional radar lacks resolution and can’t distinguish nearby objects. Radars are also known to sound false alarms and they consistently fail to process information fast enough to be helpful on the highway. (Source: EE Times)

On the plus side of the equation, radar tends to work well in poor weather – something that is especially important to those of us who live (and drive) under some pretty extreme weather conditions come Lake Effect Snow season. Because radar’s all-weather capabilities are so worthwhile, automotive technologists have looked to make up for the resolution problems by pairing radar with vision sensors.

TI jumped in about a year ago with:

…millimeter-wave radar chips built on standard in-house RF CMOS technology. Introduced a year ago, TI’s radar chips offer “less than 5-cm resolution accuracy, range detection to hundreds of meters, and velocity of up to 300 km/h,” according to the company.
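For a rough sense of where a figure like "less than 5 cm" comes from: in a chirp-based (FMCW) radar, the theoretical range resolution depends only on the bandwidth the chirp sweeps, ΔR = c / (2B). The sketch below just evaluates that textbook relationship. The bandwidth values are assumptions chosen for illustration, not numbers from the EE Times piece or from TI, and the function name is hypothetical.

```python
# Textbook FMCW relationship: range resolution = c / (2 * swept bandwidth).
# The bandwidths below are illustrative assumptions, not quoted TI specs.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_resolution_m(bandwidth_hz: float) -> float:
    """Return the theoretical range resolution in meters for a chirp
    that sweeps `bandwidth_hz` of spectrum."""
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    # Wider sweeps give finer resolution.
    for bandwidth_ghz in (1.0, 2.0, 4.0):
        resolution_cm = range_resolution_m(bandwidth_ghz * 1e9) * 100.0
        print(f"{bandwidth_ghz:.0f} GHz sweep -> {resolution_cm:.2f} cm")
```

Under these assumptions, a sweep of about 3 GHz or more is what it takes to get under the 5-cm mark. Real-world performance also depends on things like signal-to-noise ratio and processing choices that this sketch ignores.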

A year on, the AWR1642 mmWave sensors are in mass production, and adoption is taking off.

Sameer Wasson is TI’s GM for radar and analytic processors. He has said:

…that he expects to see TI’s radar chips inside OEMs’ vehicles “at the end of this year to mid-2019.”

(TI also has a radar chip, the IWR1642 mmWave, that is designed for industrial apps.)

Given our roots – and appreciation for DSP technology – we’re happy to see that DSP cores, along with a microcontroller, are integrated with the radar.

The integration of the DSP turns out to be critical. It gives an almost 60% footprint reduction by improving power consumption, noted [industry analyst Cedric] Malquin. Furthermore, the DSP is central to “the signal processing chain to detect and classify an object.”

Indeed, Wasson noted that the DSP inside TI’s mmWave sensors makes it possible to classify and track objects and count people, for example. “The DSP enables users to place machine learning at the edge,” he said.

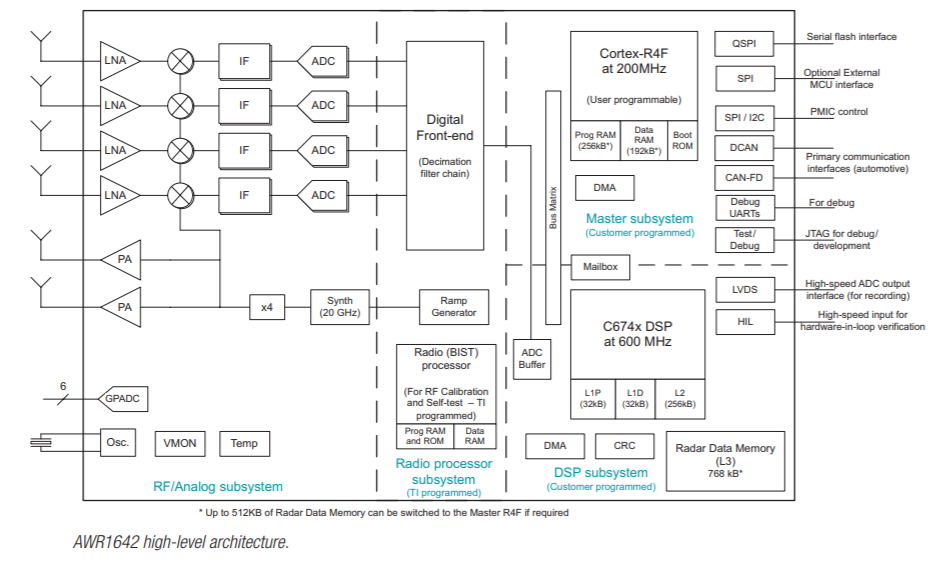

The DSP being deployed here is TI’s C674x DSP (600 MHz).

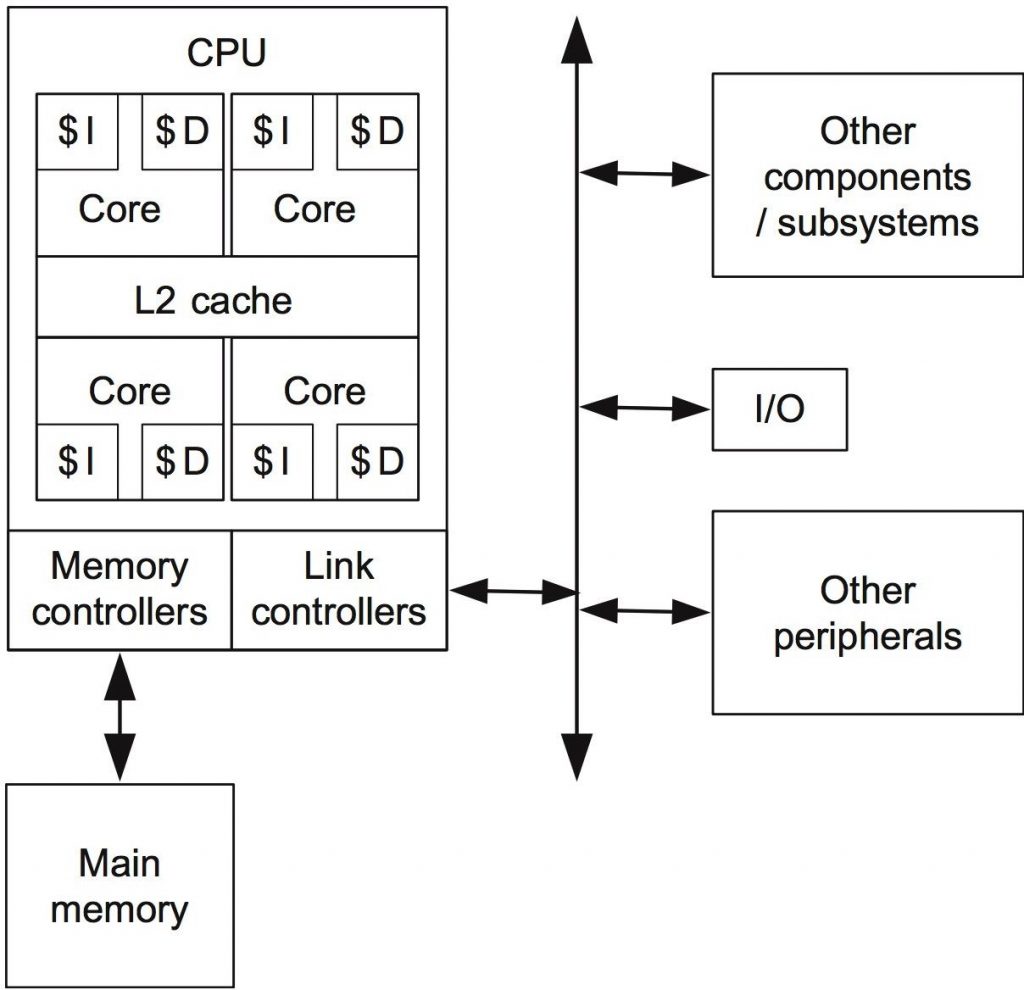

Here’s the schematic for the chip:

TI is merging radar with imaging, and imaging radar will use the radio wave data it acquires to create images.

Amazing the strides automotive technology has made over the past few years. Those autonomous vehicles are closer than they appear.

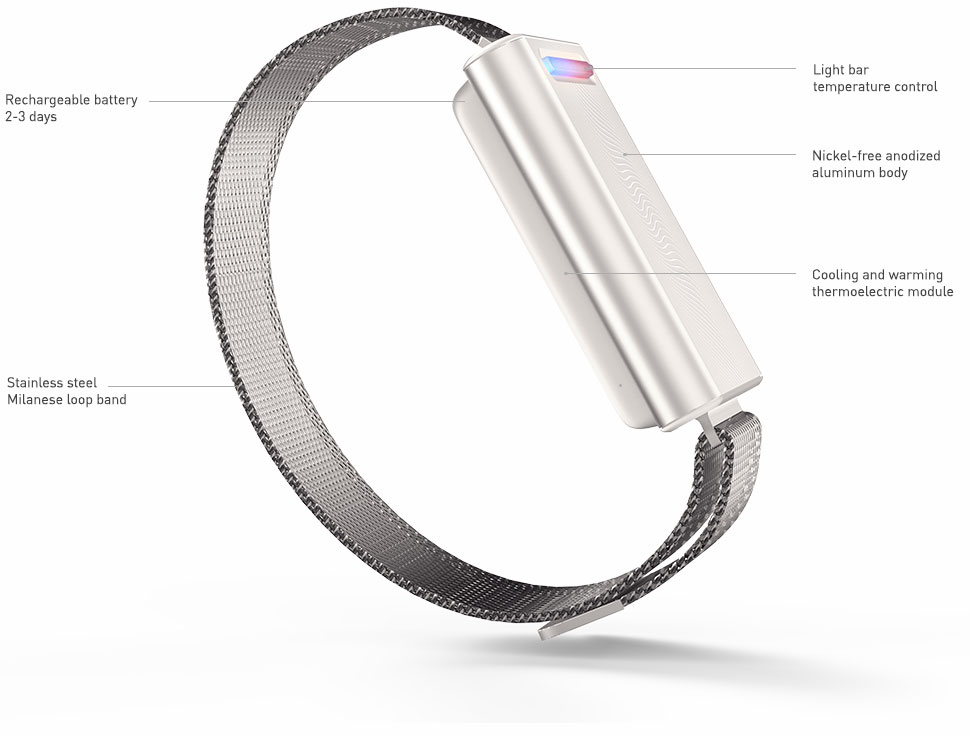


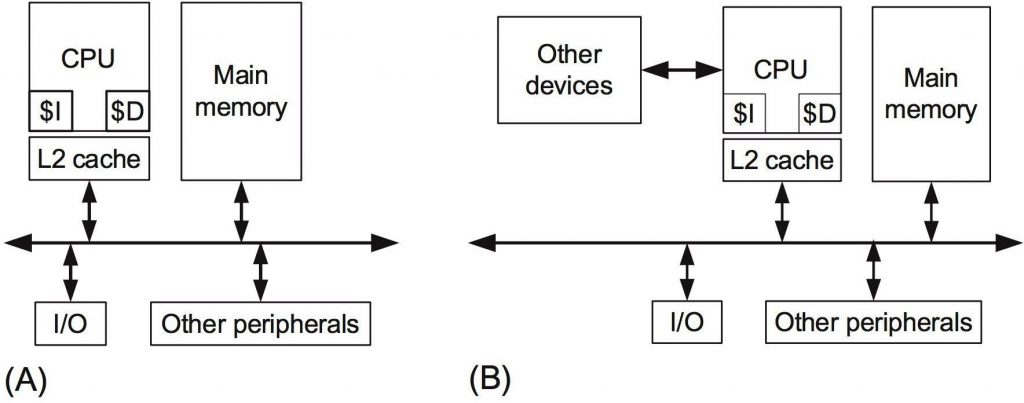
