Last week, we began summarizing Rich Quinnell’s EE Times article on the new technologies that caught his eye at the recent ARM TechCon Conference. That post covered the first five of Rich’s “ten most intriguing technologies.” Here we’ll take care of the second half of the list.

Private LoRa networks

Multi-Tech is seeing a lot of businesses implement private LoRa (Long Range wireless) networks, rather than work over public ones, for their IoT and machine-to-machine communications. To take advantage of this:

“Multi-Tech has created a ruggedized base station that supports thousands of end nodes within an area up to 10 miles in radius, and ties that local cell into the wide area network. This architecture allows users to create private LoRa networks within, say, a building or a corporate campus, then tie those networks together through the cloud. The result is the ability to deploy and manage a large number of IoT devices that are geographically diverse, in a private network.”

More news: the LoRa Alliance may be “working on a roaming strategy for [its] wireless standard that will allow devices to switch from private to public networks and back again, and among carriers.” Sounds interesting. (We’ll be staying tuned.)

Modular design prototyping system

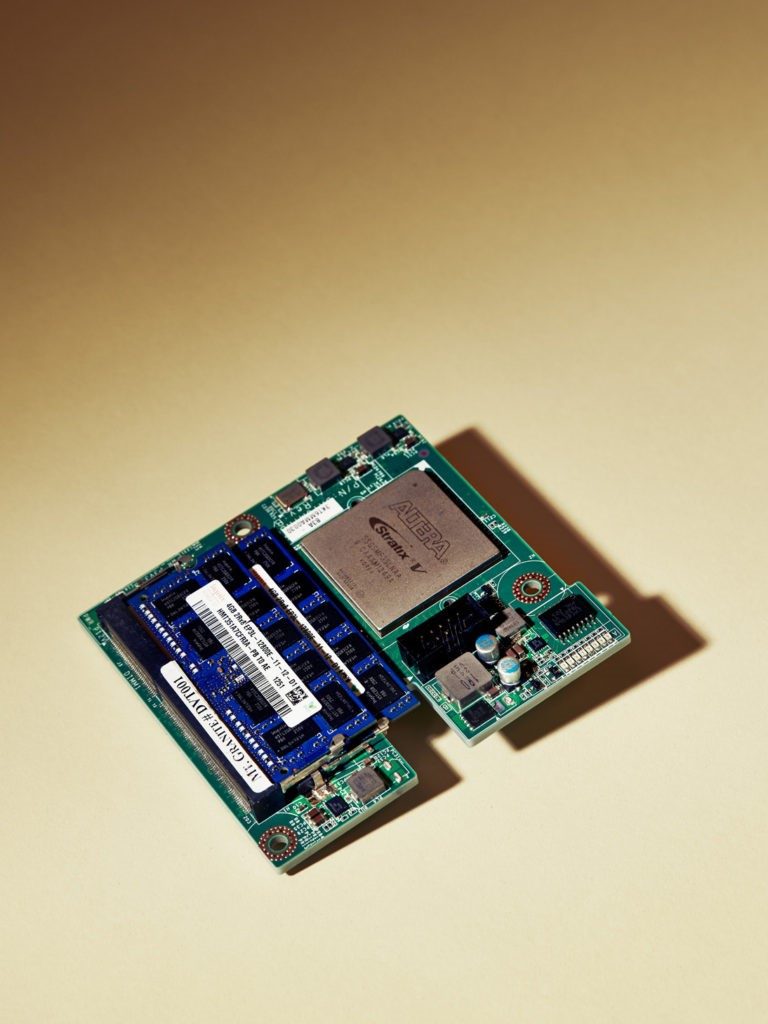

ProDesign Electronic is offering its ProFPGA prototyping system. It will let developers build SoC prototypes and test system software before the silicon is even available. There will be multiple baseboard options (one-, two-, and four-slot)

“…into which they can plug any of several FPGA modules to build up their design. The modules can then connect together to share high-speed signals, as can up to five baseboards, to build up the resources needed to prototype a full design. Each FPGA module can also accept several of 60 different IO modules to handle this functionality without consuming FPGA resources.”

This should make for faster prototyping than using a simulation.

Sensor-based IoT

Would developers be “inspired” to create sensor-based IoT devices if it were made simpler? Silicon Labs apparently thinks so. They’re introducing the Thunderboard React kit.

“In addition to the demo software, which works right out of the box, ‘the kit makes all the mobile app, cloud service, and device code available to developers’.”

The board is based on Silicon Labs’ own Bluetooth Smart radio module, “and a collection of the company’s sensors to provide both a demonstration platform and a prototyping vehicle.”

Ultrasound creates tangible virtual objects

Apparently it wasn’t demo’d on the conference show floor, but Ultrahaptics had an offsite demo of its virtual object creation system.

“This innovative technology uses a phased array of standard ultrasonic rangefinders to create pressure at up to four arbitrary points within a conical volume above the array. By refreshing the points’ locations at speeds to 20 kHz, the array can create the illusion of a physical object in mid-air. The sensation is light (like a soap bubble bursting) but quite noticeable. The aim is to give gesture control systems a physical presence to help guide user interaction. Knobs, buttons, sliders, and the like are readily created using the array, as are a variety of surface textures.”

A development kit for those interested will be available after the first of the year.
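
For anyone curious how a phased array can put pressure at a chosen point in mid-air, here is a rough Python sketch of the textbook focusing idea: drive each transducer with a phase offset that cancels its path length to the target, so every wavefront arrives there in step. This is not Ultrahaptics’ algorithm, which the article doesn’t describe. The 40 kHz carrier, the 8 x 8 grid, the 10 mm pitch, and every function name below are assumptions made for illustration, and the sketch handles only a single focal point and ignores amplitude falloff.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees C
FREQUENCY = 40_000.0    # Hz; assumed carrier, not a figure from the article
WAVENUMBER = 2.0 * math.pi * FREQUENCY / SPEED_OF_SOUND  # rad/m


def element_positions(n_side, pitch):
    """Centres (x, y, 0) of an n_side x n_side grid centred on the origin."""
    offset = (n_side - 1) * pitch / 2.0
    return [
        (col * pitch - offset, row * pitch - offset, 0.0)
        for row in range(n_side)
        for col in range(n_side)
    ]


def focus_phases(elements, focal_point):
    """Drive phase (radians, 0 to 2*pi) per element that cancels the
    propagation phase k*d, so all waves arrive at focal_point aligned."""
    return [
        (WAVENUMBER * math.dist(element, focal_point)) % (2.0 * math.pi)
        for element in elements
    ]


def relative_amplitude(elements, phases, point):
    """Magnitude of the summed unit phasors at point, scaled so that
    1.0 means every element's contribution is perfectly in phase."""
    total = sum(
        cmath.exp(1j * (phase - WAVENUMBER * math.dist(element, point)))
        for element, phase in zip(elements, phases)
    )
    return abs(total) / len(elements)


if __name__ == "__main__":
    elements = element_positions(8, 0.01)   # hypothetical 8 x 8 array, 10 mm pitch
    focus = (0.02, -0.01, 0.15)             # target point 15 cm above the array
    phases = focus_phases(elements, focus)
    print("at focus: ", relative_amplitude(elements, phases, focus))
    print("off focus:", relative_amplitude(elements, phases, (-0.03, 0.03, 0.15)))
```

Run as a script, it prints the relative amplitude at the target and at a point a few centimetres away. Moving the target is just a matter of recomputing the phases, which is presumably what makes a fast refresh of the focal points practical.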

The Zephyr Project aims to bring real-time to the IoT

Last but not least on Rich’s list is the Zephyr Project, an RTOS for IoT device developers.

“This open-source, community-created code is available through the Linux Foundation under the permissive Apache 2.0 license to make it as uncomplicated to use and deploy as possible.”

The aim? “‘To be the Linux of microcontrollers.’”

Sounds like ARM TechCon was, as always, plenty interesting. Thanks again to Rich for sharing his findings and insights.
